Wrap up

What was Puzzlebox?

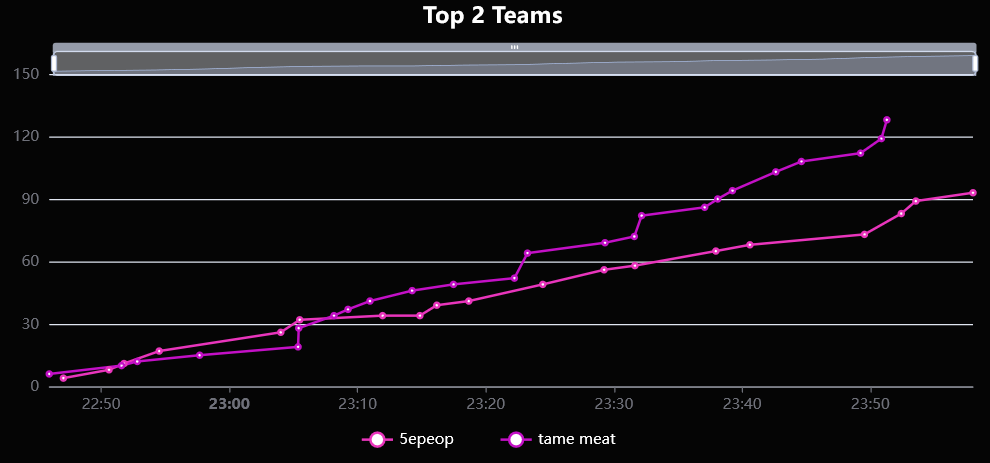

Puzzlebox was a holiday event that was run independently for two groups: ✈✈✈ Galactic Trendsetters ✈✈✈ and teammate. This was a 75-minute event where two teams were competing head-to-head. Each team had ~10-12 people on it. Puzzles were released globally every 2 minutes. In total, there were 30 puzzles.

✈✈✈ Galactic Trendsetters ✈✈✈: Perfectly balanced, even after the very last-minute point gains from both teams!

teammate: I didn't notice the teams were lopsided here until we started... I thought about moving a person, but it looked even enough very early on. I should have moved them.

For everyone who played, thanks for playing: I didn’t realize until later that I was taking the huge audience for granted. Finally, I am super appreciative of Sushi and Benji for testsolving this event: they spent several hours testing every single puzzle in the days leading up to it.

If you’d like to try the puzzles, this is the static site and the puzzles are linked at the top. The rest of this post will contain some iykyk spoilers.

Philosophical Musings

Experimenting with format and scoring:

I was fairly set on a competitive event format where not all puzzles would be solved. I initially wanted a lockout-style format, but someone else was writing something different using lockout/bingo. I also considered a buzzer/quiz-bowl-style event, but that was too serial for 30 puzzles, and some of the puzzles I wrote ended up being too long. Ultimately, I settled on a point-based system for each puzzle. Here are some other scoring systems I considered:

- CTF: each puzzle is worth N divided by the number of teams that have solved it. This wouldn’t make sense for only 2 teams, but for a larger-scale event it could be interesting.

- Bounties increase: each puzzle's value increases each minute it remains unsolved. Once the first team solves it, it goes back to the base value.

- Shared bonuses: every team who solves a puzzle gets a bonus proportional to the inverse of the time they took, relative to the times the other teams took. If $t_a$ is the time team $a$ took, then their bonus is $t_a^{-1} / \sum_i t_i^{-1}$. It's kind of like harmonic proportion. If I had a simple way to explain it or if we actually had 3 or more teams, I would have wanted to try shared bonuses. The increasing bounties also sounded strategically interesting (“do I sit on the answer for 1 more minute to earn a point, at the risk that the other team will solve it?”), but I didn’t have time to look into implementing them.
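To make the three alternatives above concrete, here is a rough Python sketch. It is illustrative only and not code from the event: the function names, the one-point-per-minute `increment`, and the `bonus_pool` size are all assumptions made up for this example.

```python
from typing import Dict


def ctf_value(base_value: float, num_solvers: int) -> float:
    """CTF-style: a puzzle worth N is split across every team that solved it."""
    if num_solvers < 1:
        raise ValueError("need at least one solving team")
    return base_value / num_solvers


def bounty_value(base_value: float, minutes_unsolved: int,
                 already_solved: bool, increment: float = 1.0) -> float:
    """Increasing bounty: value grows each minute until the first solve,
    then drops back to the base value for everyone else."""
    if already_solved:
        return base_value
    return base_value + increment * minutes_unsolved


def shared_bonuses(solve_times: Dict[str, float],
                   bonus_pool: float = 1.0) -> Dict[str, float]:
    """Shared bonus: team a gets bonus_pool * (1/t_a) / sum_i (1/t_i)."""
    inverse = {team: 1.0 / t for team, t in solve_times.items()}
    total = sum(inverse.values())
    return {team: bonus_pool * inv / total for team, inv in inverse.items()}


# Hypothetical example: team "a" solves in 2 minutes, team "b" in 4 minutes.
bonuses = shared_bonuses({"a": 2.0, "b": 4.0})
```

With those made-up times, the faster team gets two thirds of the pool and the slower team one third, and the shares always sum to the whole pool. With only 2 teams this is not much different from a plain "first solve gets more" rule, which fits the point above about wanting 3 or more teams.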

Puzzle quality and length:

In a timed head-to-head format, all teams would have the same experience and hit the same roadblocks, unfair steps, etc., and all is (hopefully) forgiven by the end of the event. I think I'll never be forgiven about the glass coffin.

In particular, this let me get away with things that usually would not fly in any regular puzzle event. This also saved me a ton of time in writing the puzzles, without which I don’t think the event would have been possible. For example:

- Puzzles don’t need to be good.

- Puzzles don’t need to be fun.

- Puzzles can and should be skipped.

- Puzzles can be short or long, as long as teams know ahead of time that it is a long/short puzzle so teams don’t get “unlucky” picking the wrong one (like on Only Connect).

  - Some ideas I had at the time were a difficulty/star rating (like Puzzled Pint or Australian puzzles) or a base score value (like a test or programming competition).

- Puzzles should have unique solutions, but they don’t need to as long as there’s incentive/acceptable cheese to get someone to submit the right thing without hurting your team.

  - 11/15 might not be unique; 11/16 and 11/18 were not unique until testsolving (although they were solved in testing). The existence of a "Fox Squirrel" (11/25) is unfortunate, and some clues might not be unique. Even 11/19 might have some issues.

Rejecting conventions

One day, I wish to write a puzzle-based event that I can give to both my friends who solve puzzles and friends who do not, and have both groups find the event fun. I think one part of that is to reject conventional “puzzle” wisdom. This meant that I did not intend to write a meta, which also allowed for some puzzle ideas that had extremely constrained answers (like 11/9).

The other dimension is to avoid all ciphers/encodings, and to give blanks and extraction early on if possible. I think many people get introduced to puzzles through things like College Puzzle Challenge or Puzzled Pint, but there is so much more elegance to puzzles than Morse Code and Flag Semaphore.

Giving away enumeration lengths prevented someone from guessing incorrectly for 11/18. I also wanted to avoid indexing as much as possible (except for 11/22, which is not conventional indexing anyway).

Finally, I was okay with single-step short puzzles with a single aha, like 11/4, 11/29 or 11/30. Many of these single ahas would actually not be fun to do twice or in a “full” “hunt-sized” puzzle. Relatedly, many of Deusovi’s White Day Puzzles felt like a successful execution of some of these convention rejections too.

Writing Schedule

I started thinking about the puzzle-of-the-day after waking up or during the day and usually started writing at ~10pm. Sometimes at 11pm. One time, it was already past midnight (oops).

This forced me to keep it short as I weighed bedtime against finishing the puzzle. I struggled with several versions of the 11/6 grid and words to get a better fill, but failed. I wanted 11/6 to be solvable by eyeballing; unfortunately, I don’t think that was possible. Similarly, I ran out of time on 11/26.

Weekend ones: I sometimes had more time, but didn't spend too much more time than I did on weekdays. I suspected 11/18 was not unique going into testsolving, and it had to be rewritten to be (hopefully) unique.

If I could remember my dream from the previous night, I tried to use something from that as inspiration. It gave me an easy constraint and my dreams are wack.

Tech

A short note on tech: I actually tried tph-site first, ran into some Docker errors, switched to gph-site, ran into some gph-site deploy errors… and then decided I’d run everything on staging. The tech definitely isn’t polished, but at some point I had to stop worrying about polish and actually implement the scoring system.

March update: staticifying the site took way too long. I had to learn how to move all the files to /puzzles and fix all the URLs because I still don’t know how to make /foo.html redirect to /foo. Also, merging the DBs between ✈✈✈ Galactic Trendsetters ✈✈✈ and teammate took longer than expected because Google Sheets adds a zero-width whitespace to their time format, and something still looks off with it.
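For anyone hitting the same invisible-character problem, here is a minimal sketch of one way to clean timestamps before parsing them. It is not the script used for the merge: the `MM/DD/YYYY HH:MM:SS` layout, the list of characters to strip, and the name `parse_sheet_time` are all assumptions for illustration, since the actual export format isn't recorded here.

```python
from datetime import datetime

# Zero-width / invisible code points to delete. This list is an assumption;
# extend it if a different character shows up in the exported cells.
INVISIBLE = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff"))


def parse_sheet_time(raw: str, fmt: str = "%m/%d/%Y %H:%M:%S") -> datetime:
    """Strip invisible characters and surrounding whitespace, then parse.

    `fmt` is a hypothetical layout; pass whatever the sheet really exports.
    """
    cleaned = raw.translate(INVISIBLE).strip()
    return datetime.strptime(cleaned, fmt)


# A made-up timestamp with a zero-width space hiding in the middle.
example = parse_sheet_time("12/10/2023\u200b 19:04:05")
```

Printing `repr()` of a suspicious cell is a quick way to see which character is actually hiding in it, because the escape sequence shows up even when the character itself does not.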

How long did this take me?

I think actually not that long, and would have been much less if I didn’t have to rewrite the last 10 puzzles. This is what I recorded in December, with some post-event additions to this list:

- Server cost ~$20 + another ~$20 since I didn’t take the server down until March.

- Writing initially (November): 30-40 hours - a little over 1hr/day

- Initial tech: 4 hours

- Initial postprod: 3 hours

- Rewrites: 6 hours

- More tech and prep for testsolving: 2 hours

- Testsolving + edits: 7 hours

- More edits: 2 hours

- Scoring and format prep: 4 hours

- Solutions + author's notes: 4 hours

- DB merging: 3 hours

- Staticifying site: 5 hours

- Wrapup: 4 hours

- Running the events: 3 hours

In other words:

- Puzzle writing: ~40-50 hours (most in November)

- Tech and postprod: 13 hours

- Testsolving: 7 hours

- Running: 3 hours

- Posthunt work (wrapup, staticification, etc): 15 hours (never would have guessed this, which is partially why this wrapup is out so so late.)

This is about 60-70 hours pre-hunt and less than 90 hours in total.
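As a quick sanity check on the grand total, the itemized hours from the first list can be summed directly. The sketch below treats the initial writing estimate as a 30 to 40 hour range and leaves out server cost; the shortened labels and the helper name `total_range` are made up for this example.

```python
from typing import Dict, Tuple

# (low, high) hour estimates copied from the itemized list.
HOURS: Dict[str, Tuple[int, int]] = {
    "writing (November)": (30, 40),
    "initial tech": (4, 4),
    "initial postprod": (3, 3),
    "rewrites": (6, 6),
    "more tech + testsolve prep": (2, 2),
    "testsolving + edits": (7, 7),
    "more edits": (2, 2),
    "scoring + format prep": (4, 4),
    "solutions + notes": (4, 4),
    "DB merging": (3, 3),
    "staticifying": (5, 5),
    "wrapup": (4, 4),
    "running the events": (3, 3),
}


def total_range(hours: Dict[str, Tuple[int, int]]) -> Tuple[int, int]:
    """Sum the low and high ends of every estimate separately."""
    low = sum(lo for lo, _ in hours.values())
    high = sum(hi for _, hi in hours.values())
    return low, high


low, high = total_range(HOURS)
print(f"{low}-{high} hours")  # prints "77-87 hours"
```

That range lines up with the "less than 90 hours in total" figure.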